Tokenization in natural language processing: Simplified.

The descriptive text is written by an AI agent: I draw the visual storyboard, and the agent narrates it.

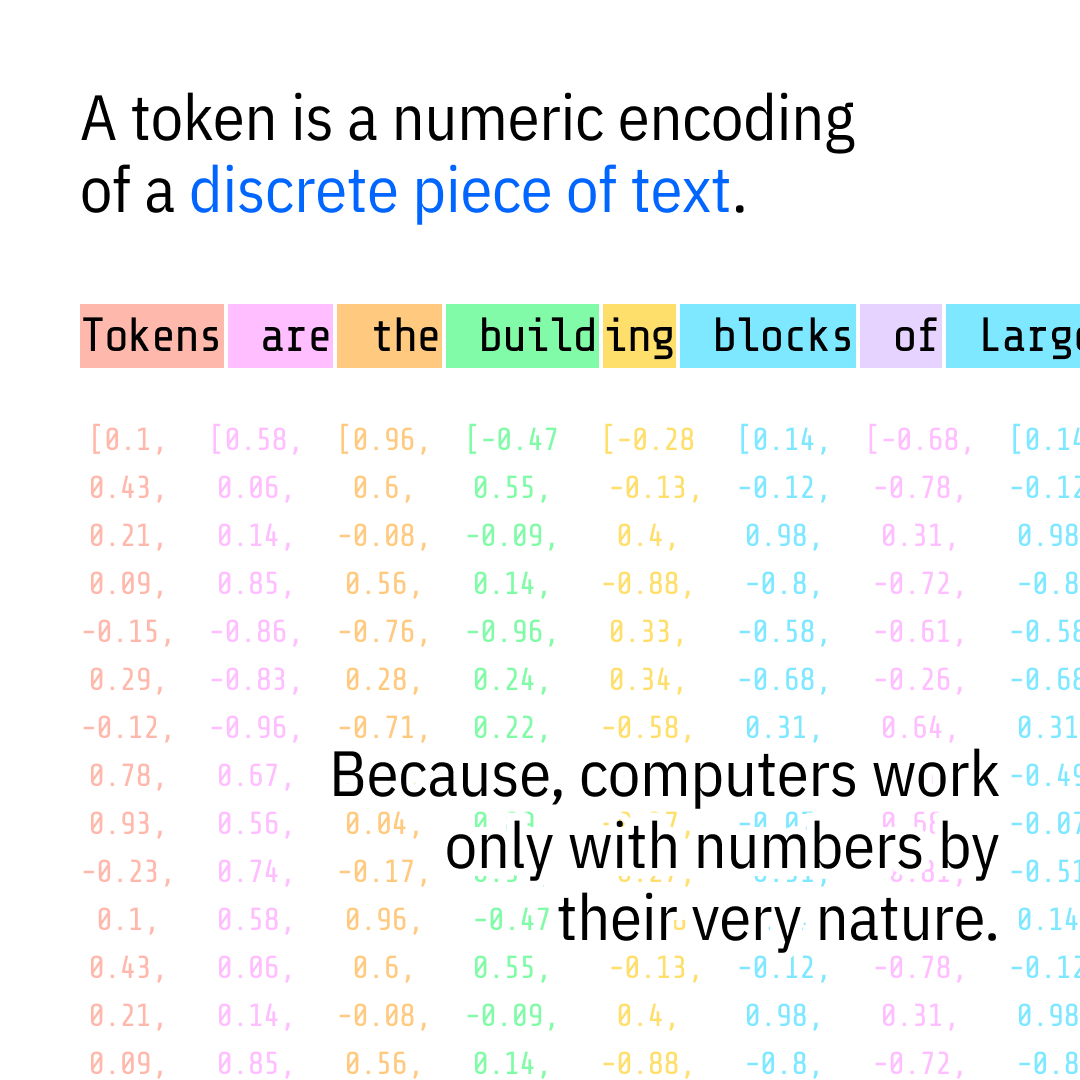

What is a Token?

A token is a discrete piece of text that a model represents as a number (its token ID). In simpler terms, a language model doesn't "read" words the way humans do; it converts them into numbers first. Each word, subword, or character can become a token, depending on the chosen tokenization strategy.
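To make the text-to-number idea concrete, here is a minimal sketch of a word-level encoder. The vocabulary, its IDs, and the `encode` helper are made up for illustration; real tokenizers learn a much larger vocabulary from data.

```python
# Toy word-level vocabulary; the words and IDs are invented for this example.
vocab = {"tokens": 0, "are": 1, "the": 2, "building": 3, "blocks": 4, "<unk>": 5}

def encode(text: str) -> list[int]:
    """Map each lowercased, whitespace-separated word to its ID.

    Words missing from the vocabulary fall back to the <unk> ID.
    """
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

print(encode("Tokens are the building blocks"))  # [0, 1, 2, 3, 4]
print(encode("Tokens are magic"))                # [0, 1, 5]
```

The second call shows why pure word-level vocabularies are limiting: any unseen word collapses into the same unknown ID.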

How Many Tokens Do We Need?

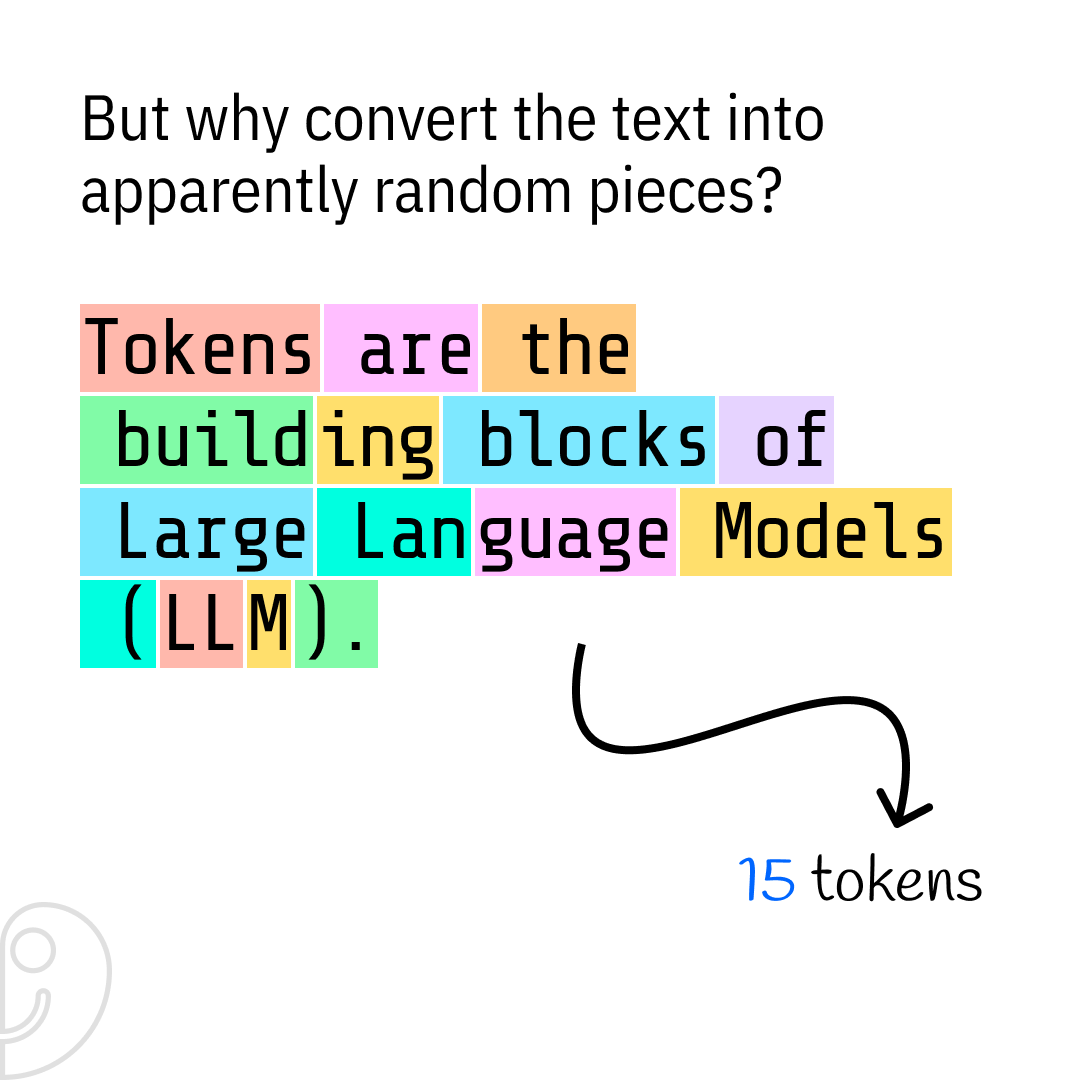

Take the sentence: "Tokens are the building blocks of Large Language Models (LLM)."

With word and subword tokenization, the sentence splits into 15 tokens. But what happens if we tokenize each character individually?

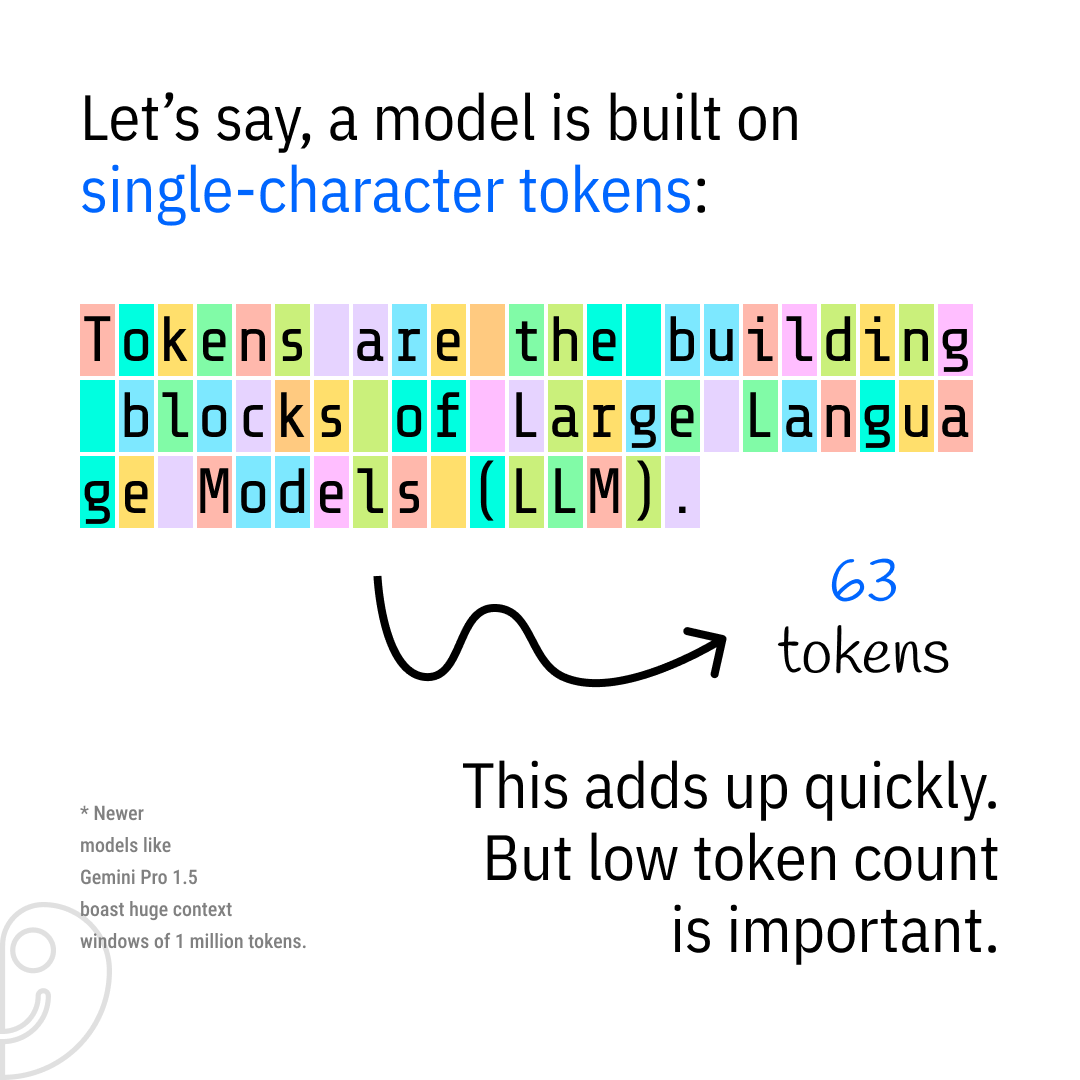

The Problem with Too Many Tokens

If we tokenize each character separately, the same sentence now has 63 tokens instead of 15, roughly four times as many. The model has to process every one of those tokens, so the same text costs more compute and takes up more of the model's input. Keeping the token count low for a given text is therefore a key part of making models efficient.
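The comparison can be reproduced roughly with a few lines of Python. This is only a sketch: the regular expression below is an assumed, simplified word-and-punctuation splitter, not the tokenizer used for the figures, so the exact counts depend on the splitting rules and will not necessarily match the numbers quoted above.

```python
import re

sentence = "Tokens are the building blocks of Large Language Models (LLM)."

# Word-level split: runs of letters/digits, or single punctuation marks.
word_tokens = re.findall(r"\w+|[^\w\s]", sentence)

# Character-level split: every character, spaces included, is its own token.
char_tokens = list(sentence)

print(len(word_tokens), word_tokens)
print(len(char_tokens))
```

Whatever the exact rules, the character-level split produces several times more tokens than the word-level one for the same sentence.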

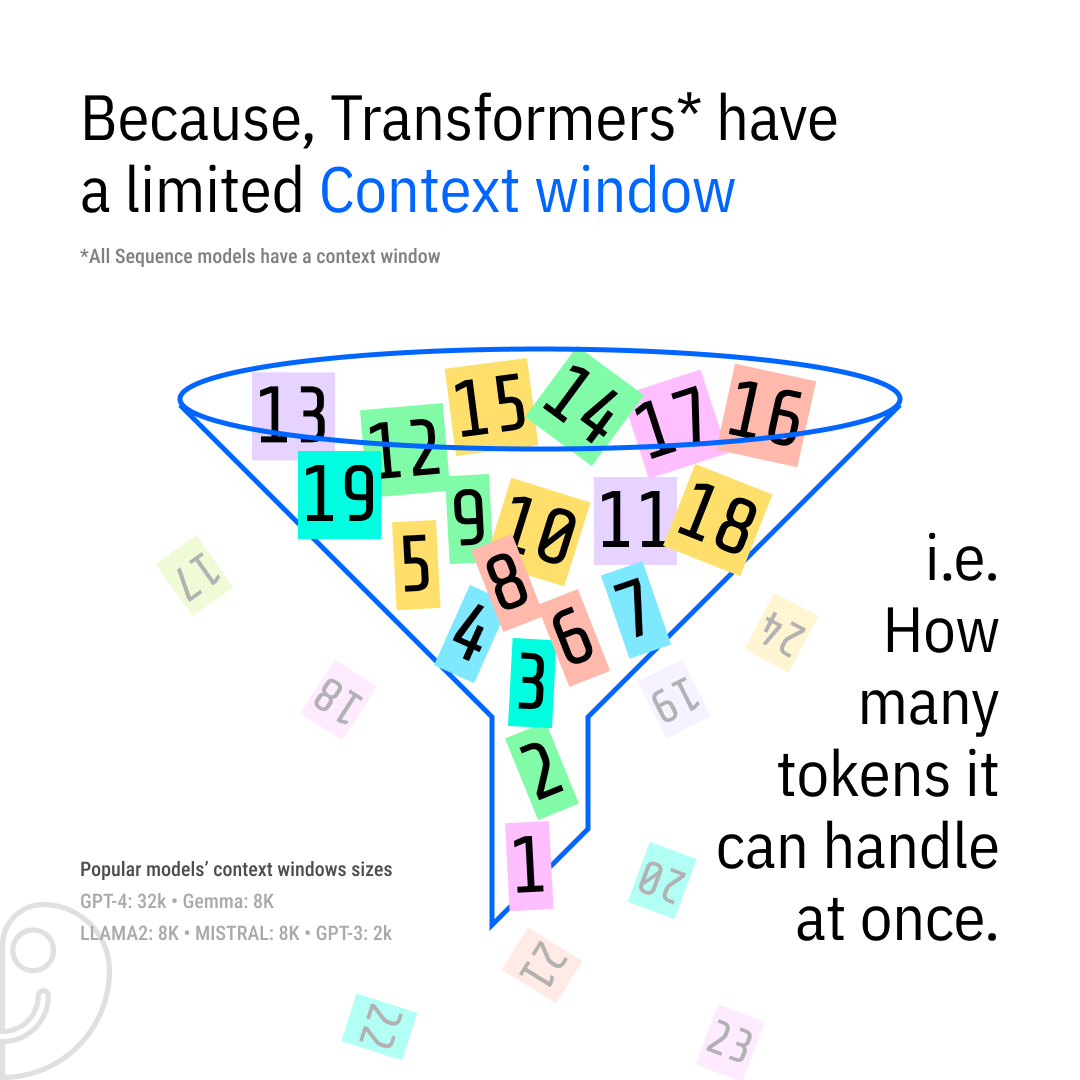

Why Token Count Matters

LLMs are built on transformers, which have a limited context window: the number of tokens they can process at once. For instance, GPT-4 launched with 8,192-token and 32,768-token variants, Llama 2 has a 4,096-token window, and Mistral 7B was released with an 8,192-token window. Efficient tokenization ensures we make the most of this window.
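In practice, a fixed window means that input longer than the limit has to be cut down before the model sees it. The sketch below shows one simple policy, keeping only the most recent tokens; the function name, the `reserved_for_output` parameter, and the tiny window size are assumptions chosen for illustration, not any particular library's API.

```python
def truncate_to_window(
    token_ids: list[int],
    context_window: int,
    reserved_for_output: int = 0,
) -> list[int]:
    """Keep the most recent tokens that fit in the context window.

    `reserved_for_output` leaves room for the tokens the model will generate.
    """
    budget = context_window - reserved_for_output
    if budget <= 0:
        raise ValueError("No room left for input tokens")
    return token_ids[-budget:]

# Hypothetical 8-token window with 2 tokens reserved for the reply.
history = list(range(10))  # stand-in for 10 token IDs
print(truncate_to_window(history, context_window=8, reserved_for_output=2))
# [4, 5, 6, 7, 8, 9]
```

A tokenizer that needs fewer tokens per sentence lets more of the original text survive this cut.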

Balancing Tokenization Complexity

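There’s a tradeoff between a small vocabulary of short tokens and a large vocabulary of longer tokens. With a small vocabulary, the same text turns into more tokens, so the model needs a bigger context window to cover it. With a large vocabulary, sequences are shorter, but the model has to store and score many more token entries, which increases its size and computational cost.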
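A back-of-the-envelope calculation illustrates both sides of the tradeoff. The numbers here are assumptions picked for illustration: a hidden size of 768, a 256-entry character-level vocabulary, and a 50,000-entry subword vocabulary. The sketch also assumes standard self-attention, where every token is compared with every other token, so that part of the cost grows with the square of the sequence length.

```python
def embedding_parameters(vocab_size: int, d_model: int) -> int:
    """Size of the embedding table: one d_model-sized vector per token."""
    return vocab_size * d_model

def attention_pairs(sequence_length: int) -> int:
    """Number of token-to-token comparisons in one full self-attention pass."""
    return sequence_length * sequence_length

D_MODEL = 768  # hypothetical hidden size

# Small vocabulary: cheap embedding table, but longer sequences.
print(embedding_parameters(256, D_MODEL), attention_pairs(63))
# 196608 3969

# Large vocabulary: bigger embedding table, but shorter sequences.
print(embedding_parameters(50_000, D_MODEL), attention_pairs(15))
# 38400000 225
```

The sequence lengths 63 and 15 are the character-level and subword-level counts for the example sentence earlier in the article.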

Finding the Right Balance

Optimizing tokenizer vocabulary size is an ongoing challenge. The goal is a vocabulary whose tokens are neither too short (which makes sequences long) nor too long and rare (which bloats the vocabulary), so that processing stays efficient while the richness of the language is preserved.

How Do Tokenizers Work?

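Tokenizers analyze large datasets to find frequently occurring sequences of text. This is how a common word like "hello" or a common fragment like "ness" becomes a single token, while less common words get split into smaller pieces. One widely used technique for this is Byte-Pair Encoding (BPE): it starts from individual characters or bytes and repeatedly merges the most frequent adjacent pair into a new token until the vocabulary reaches the desired size.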
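The merge loop behind BPE is short enough to sketch. What follows is a simplified, character-level illustration on a made-up five-word corpus, with hypothetical helper names; production tokenizers work on bytes, pre-split text differently, and train on far larger datasets.

```python
from collections import Counter

def get_pair_counts(words: dict[tuple[str, ...], int]) -> Counter:
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in words.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs

def merge_pair(words: dict[tuple[str, ...], int], pair: tuple[str, str]):
    """Replace every occurrence of `pair` with one merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def train_bpe(corpus: list[str], num_merges: int) -> list[tuple[str, str]]:
    """Learn up to `num_merges` merges, most frequent pair first."""
    words = dict(Counter(tuple(word) for word in corpus))  # start from characters
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(words)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the pair seen first
        merges.append(best)
        words = merge_pair(words, best)
    return merges

corpus = ["kindness", "darkness", "sadness", "illness", "happiness"]
merges = train_bpe(corpus, num_merges=3)
print(merges)  # [('n', 'e'), ('ne', 's'), ('nes', 's')]
```

After three merges, the shared ending "ness" has become a single symbol, while the rarer word stems are still split into characters.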

Further Resources

Want to dive deeper? Check out these great resources:

Andrej Karpathy on YouTube for deep dives into LLMs.

3Blue1Brown for visual explanations of complex topics.

Tiktokenizer by dqbd to experiment with tokenization in real time.