Unlocking Knowledge with Retrieval-Augmented Generation (RAG)

The descriptive text is written by an AI agent. I draw the visual storyboard, and the agent narrates it.

Large Language Models (LLMs) are powerful tools, but they have limitations—they can only generate responses based on the data they were trained on. What happens when we need information beyond their training? That’s where Retrieval-Augmented Generation (RAG) comes in. Let’s explore how RAG enhances LLMs by integrating real-time knowledge retrieval.

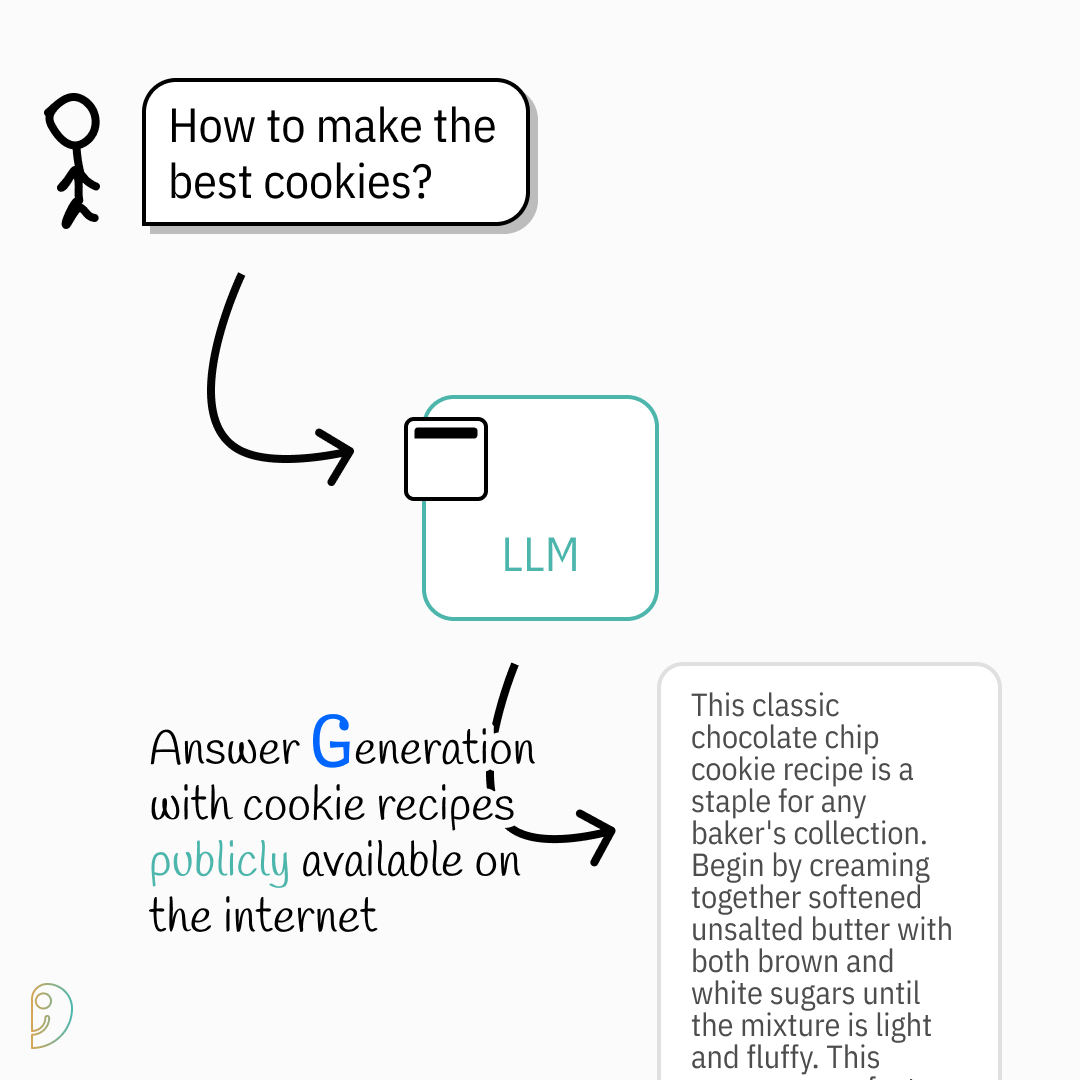

From General Knowledge to Personalized Answers

When you ask an LLM how to make the best cookies, it draws on what it learned from publicly available information, such as generic cookie recipes from the internet. While this works for common knowledge, what if you want something more special, like grandma’s secret recipe?

Enhancing Queries with External Knowledge

Instead of relying solely on what the model knows, RAG allows us to augment our query. When we specify that we want “a few secrets from grandma’s book,” the system can retrieve private, specialized knowledge and use it to give a more personalized and enriched response.

Handling Large Documents with Limited Context

LLMs have a limited context window, meaning they can only process a certain amount of text at once. Instead of feeding an entire book into the model, we extract only the most relevant parts, ensuring the LLM focuses on what's truly important.
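To make the idea of a limited context window concrete, here is a minimal sketch that keeps only as many of the most relevant chunks as fit in a budget. The function name `select_within_budget` and the sample chunks are made up for illustration, and word count is only a rough stand-in for tokens; a real system would count tokens with the model's own tokenizer.

```python
def select_within_budget(ranked_chunks, max_words):
    """Keep the highest-ranked chunks that fit inside a word budget.

    Assumes `ranked_chunks` is already sorted, most relevant first.
    Word count is a rough proxy for tokens (an assumption of this sketch).
    """
    selected = []
    used = 0
    for chunk in ranked_chunks:
        n_words = len(chunk.split())
        if used + n_words > max_words:
            continue  # this chunk would overflow the budget, so skip it
        selected.append(chunk)
        used += n_words
    return selected


# Hypothetical chunks from grandma's book, most relevant first.
chunks = [
    "Chill the dough for one hour before baking.",
    "Grandma always browned the butter first.",
    "The book was printed in 1962 and has a red cover.",
]
print(select_within_budget(chunks, max_words=15))
```

With a budget of 15 words, the two baking tips fit and the note about the book's cover is left out.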

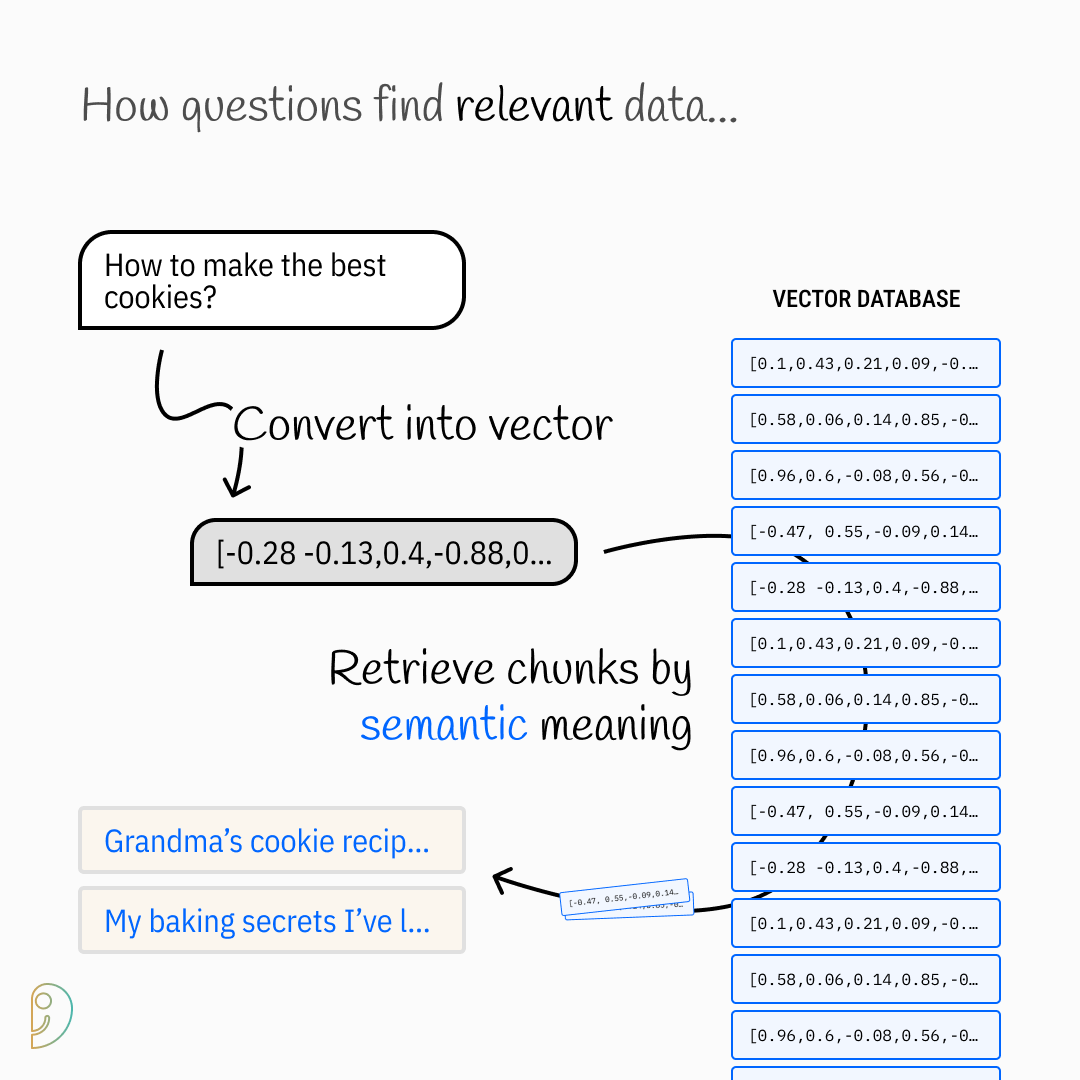

Storing Knowledge Efficiently with Vector Databases

To make retrieval efficient, we first break documents into smaller chunks. Each chunk is transformed into a vector embedding that captures its meaning, and the embeddings are stored in a vector database, making it easier to search for relevant information later.
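The chunk-embed-store step can be sketched in a few lines. Everything here is a simplification under stated assumptions: `chunk_text` and `toy_embed` are hypothetical helpers, the "embedding" is just a word count rather than the dense vector a real embedding model would return, and the "vector database" is a plain Python list.

```python
from collections import Counter


def chunk_text(text, chunk_size=8, overlap=2):
    """Split text into overlapping windows of `chunk_size` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def toy_embed(text):
    """Toy embedding: lowercase word counts (a stand-in for a real model)."""
    return Counter(word.strip(".,!?").lower() for word in text.split())


# Hypothetical excerpt from grandma's book.
book = (
    "Brown the butter before mixing. Chill the dough for one hour. "
    "Bake at a low heat until the edges turn golden."
)

# The "vector database" in this sketch is a list of (embedding, chunk) pairs.
store = [(toy_embed(chunk), chunk) for chunk in chunk_text(book)]
for _, chunk in store:
    print(chunk)
```

The overlap between neighbouring chunks is a common design choice: it reduces the chance that a sentence split across a chunk boundary loses its meaning.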

Retrieving Relevant Information by Semantic Meaning

When a user asks, “How to make the best cookies?”, the query is also converted into a vector. The system then searches the vector database for the most semantically relevant chunks, ensuring it finds the best match rather than just keyword-based results.
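Semantic search usually comes down to comparing vectors, and cosine similarity is one common way to do that. The sketch below assumes the chunks and the query have already been embedded; the three-dimensional vectors are invented for illustration (real embeddings have hundreds or thousands of dimensions), and `top_k` is a hypothetical helper, not part of any particular vector database.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as matching nothing
    return dot / (norm_a * norm_b)


def top_k(query_vector, store, k=2):
    """Return the k chunks whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query_vector, vec), chunk) for vec, chunk in store]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:k]]


# Made-up vectors: the first two chunks point in a "baking" direction.
store = [
    ([0.9, 0.1, 0.0], "Brown the butter before mixing the dough."),
    ([0.8, 0.3, 0.1], "Chill the dough for one hour before baking."),
    ([0.0, 0.2, 0.9], "The book has a red cover."),
]

# Pretend embedding of "How to make the best cookies?"
query_vector = [1.0, 0.2, 0.0]
print(top_k(query_vector, store, k=2))
```

Note that the query shares no keywords with the butter tip, yet that chunk ranks first because its vector points in nearly the same direction as the query's.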

Merging Retrieved Knowledge with LLM Capabilities

Now that we have retrieved grandma’s secret recipe, the LLM combines this proprietary knowledge with its language abilities to generate a meaningful and enriched response—something a standard LLM alone couldn’t achieve.
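In practice, "combining" usually means placing the retrieved chunks into the prompt next to the question. Here is one possible way to assemble such a prompt. The function `build_prompt` and the prompt wording are assumptions made for this sketch, and no model is called; the resulting string is what would be sent to the LLM.

```python
def build_prompt(question, retrieved_chunks):
    """Place retrieved chunks ahead of the question as context."""
    context = "\n".join(f"- {chunk}" for chunk in retrieved_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical chunks retrieved from grandma's book.
retrieved = [
    "Brown the butter before mixing the dough.",
    "Chill the dough for one hour before baking.",
]
print(build_prompt("How to make the best cookies?", retrieved))
```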

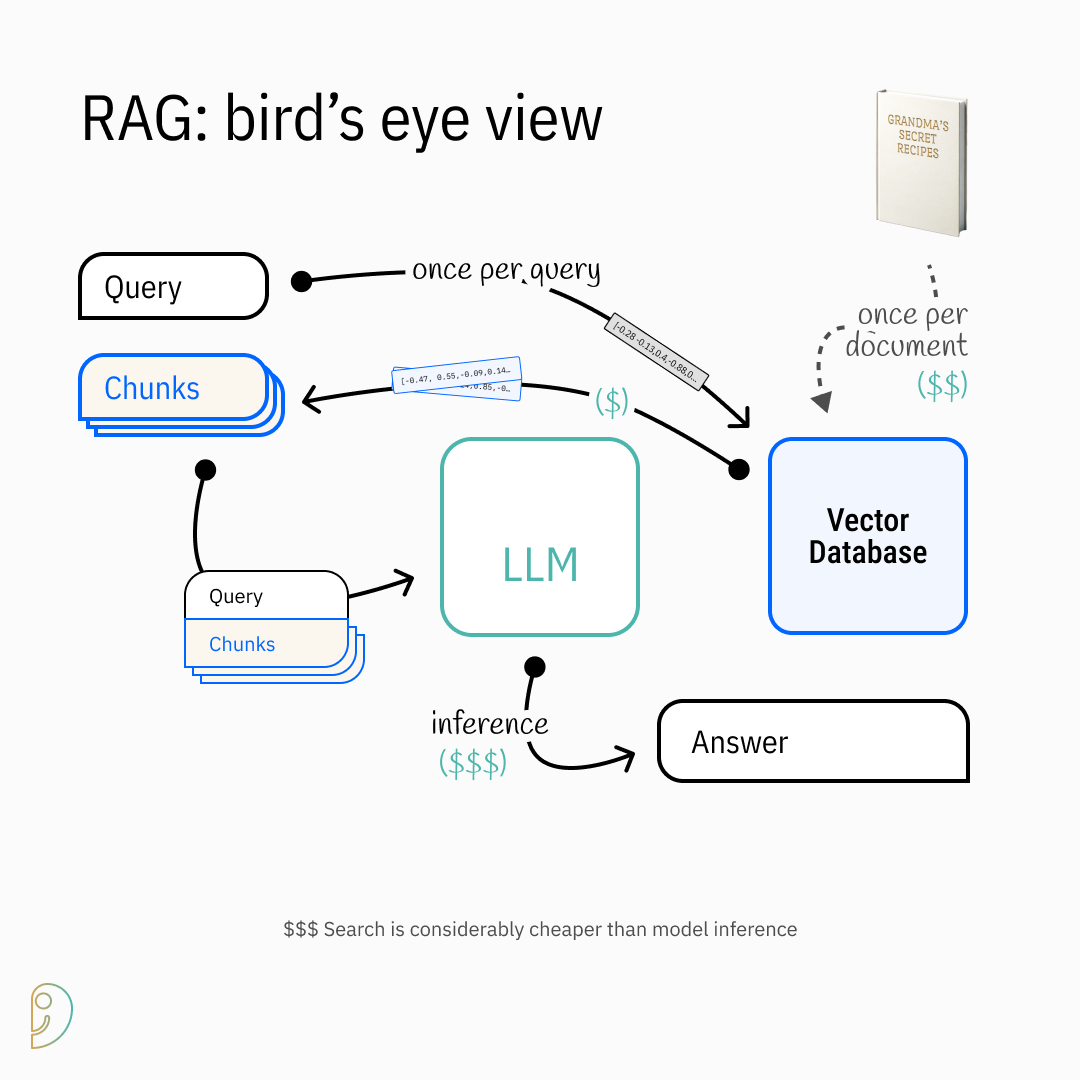

The Bigger Picture: RAG in Action

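From a bird’s-eye view, RAG involves querying, retrieving relevant chunks, and feeding them into the LLM for response generation. This approach tends to be more accurate on questions the retrieved documents cover, and it can be cost-effective: looking up a few relevant chunks is usually far cheaper than sending an entire document through the model or retraining it on new data.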
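The whole flow can be written as one small function that takes the retrieval and generation steps as plug-in callables. This is only a sketch of the shape of the pipeline: `rag_answer`, `fake_retrieve`, and `fake_generate` are hypothetical names, and the two stand-ins exist so the example runs without a vector database or a model.

```python
def rag_answer(question, retrieve, generate, k=2):
    """Query -> retrieve relevant chunks -> generate a response.

    `retrieve(question, k)` should return a list of text chunks and
    `generate(prompt)` should return the model's text; both are supplied
    by the caller.
    """
    chunks = retrieve(question, k)
    prompt = "Context:\n" + "\n".join(chunks) + f"\n\nQuestion: {question}"
    return generate(prompt)


# Stand-in retriever: returns the first k notes regardless of the question.
def fake_retrieve(question, k):
    notes = [
        "Brown the butter first.",
        "Chill the dough for one hour.",
        "Use a red bowl.",
    ]
    return notes[:k]


# Stand-in generator: reports the prompt size instead of calling a model.
def fake_generate(prompt):
    return f"(model output for a prompt of {len(prompt.split())} words)"


print(rag_answer("How to make the best cookies?", fake_retrieve, fake_generate))
```

Keeping retrieval and generation behind plain function arguments makes it easy to swap the toy pieces for a real vector database and a real model later.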

Why RAG is the Future of AI-Powered Knowledge

Compared to a standalone LLM, RAG enables real-time retrieval, access to proprietary knowledge, continuous updates without retraining, and a reduced risk of hallucination, all while maintaining the LLM’s ability to generate text. It’s a powerful technique that blends the best of search and generation.

Continue Your Learning

RAG is a game-changing approach that enhances LLMs by retrieving real-time, proprietary, and relevant knowledge. If you're interested in implementing RAG or diving deeper, here are some great resources to explore:

OpenAI Cookbook: A hands-on guide to question answering using embeddings-based search.

@IBMTechnology: A YouTube video explaining Retrieval-Augmented Generation.

@MervinPraison: Quick code examples to help you get started with RAG implementations.

Stay curious, keep experimenting, and explore how RAG can transform your AI applications!

Follow me on LinkedIn: linkedin.com/in/saadchdhry